Image Classifier Practice with VGGNet

Uploaded on March 19, 2024, at 05:33:45. Author: 재형이

Practice Goals

- Train on images with VGGNet and build an image classifier that sorts images into 10 categories. (Dataset: CIFAR-10)

- Understand how to use a pre-trained model.

Problem Definition

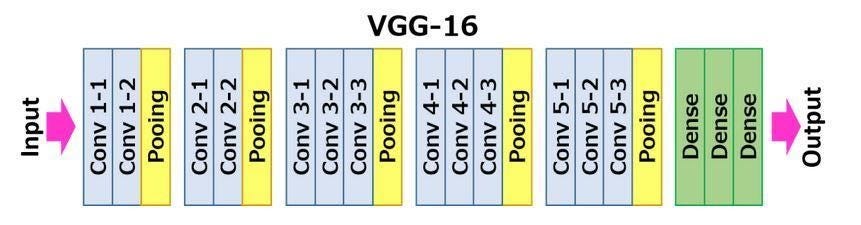

- A look at the VGGNet architecture

Key Code
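The names VGG11 through VGG19 refer to the number of weight layers (convolutional plus fully connected). A small sketch that counts them from the same layer lists used in the code below, assuming three fully connected layers in the classifier; `count_weight_layers` is a hypothetical helper written for this illustration, not part of PyTorch:

```python
# Layer lists: integers are conv output channels, 'M' marks a 2x2 max-pool.
cfg = {
    'VGG11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'VGG13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}

NUM_FC_LAYERS = 3  # assumption: three fully connected layers in the classifier


def count_weight_layers(layers, num_fc=NUM_FC_LAYERS):
    """Count conv layers (the integer entries) plus the fully connected layers."""
    num_conv = sum(1 for x in layers if x != 'M')
    return num_conv + num_fc


for name, layers in cfg.items():
    print(name, count_weight_layers(layers))
```

Pooling layers carry no weights, so the `'M'` entries are skipped in the count.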

1. VGGNet

    # Model
    cfg = {
        'VGG11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        'VGG13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
        'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
    }

    class VGG(nn.Module):
        def __init__(self, vgg_name):
            super(VGG, self).__init__()
            self.features = self._make_layers(cfg[vgg_name])
            self.classifier = nn.Sequential(
                nn.Linear(512 * 1 * 1, 360),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(360, 100),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(100, 10),
            )

        def forward(self, x):
            out = self.features(x)
            # Pool to 1x1 so the flattened size is 512 for any input resolution
            # (a 32x32 image is already 1x1 here; a 224x224 image would be 7x7).
            out = nn.functional.adaptive_avg_pool2d(out, 1)
            out = out.view(out.size(0), -1)
            out = self.classifier(out)
            return out

        # 'VGG11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        def _make_layers(self, cfg):
            layers = []
            in_channels = 3
            for x in cfg:
                if x == 'M':
                    layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
                else:
                    layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),
                               nn.BatchNorm2d(x),
                               nn.ReLU(inplace=True)]
                    in_channels = x
            return nn.Sequential(*layers)

2. Pretrained model

    from torchvision import models

    vgg16 = models.vgg16(pretrained=True)
    vgg16.to(DEVICE)

    loss = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(vgg16.classifier.parameters(), lr = LEARNING_RATE, momentum=0.9)

CIFAR Classifier (VGGNet)

- Using the CIFAR dataset, build an image classifier that identifies the object contained in each image.

[Step1] Load libraries & Datasets

    import numpy as np
    import matplotlib.pyplot as plt

    import torch
    from torch.utils.data import DataLoader
    from torch import nn

    from torchvision import datasets
    from torchvision.transforms import transforms
    from torchvision.transforms.functional import to_pil_image

[Step2] Data preprocessing

- Augment the loaded images to improve training accuracy.

- RandomCrop

- RandomHorizontalFlip

- Normalize

    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Resize((224, 224)),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ])

    train_img = datasets.CIFAR10(
        root = 'data',
        train = True,
        download = True,
        transform = transform,
    )

    test_img = datasets.CIFAR10(
        root = 'data',
        train = False,
        download = True,
        transform = transform,
    )

- Downloading https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz to data/cifar-10-python.tar.gz

100%|██████████| 170498071/170498071 [00:05<00:00, 29536832.50it/s]

Extracting data/cifar-10-python.tar.gz to data

Files already downloaded and verified

[Step3] Set hyperparameters

    EPOCH = 10
    BATCH_SIZE = 32
    LEARNING_RATE = 1e-3
    DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    print("Using Device:", DEVICE)

- Using Device: cuda
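With `BATCH_SIZE = 32`, the number of batches per epoch follows from the dataset sizes. A quick check, assuming the standard CIFAR-10 split of 50,000 training and 10,000 test images and a loader that keeps the final partial batch (the PyTorch `DataLoader` default):

```python
import math

BATCH_SIZE = 32
TRAIN_SIZE = 50_000  # assumption: standard CIFAR-10 training split
TEST_SIZE = 10_000   # assumption: standard CIFAR-10 test split

# The last batch may be smaller than BATCH_SIZE, hence the ceiling.
train_batches = math.ceil(TRAIN_SIZE / BATCH_SIZE)
test_batches = math.ceil(TEST_SIZE / BATCH_SIZE)
last_train_batch = TRAIN_SIZE - (train_batches - 1) * BATCH_SIZE

print(train_batches, test_batches, last_train_batch)
```

Under these assumptions each epoch runs 1563 training batches, the last of which holds 16 images.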

[Step4] Create DataLoader

    train_loader = DataLoader(train_img, batch_size = BATCH_SIZE, shuffle = True)
    test_loader = DataLoader(test_img, batch_size = BATCH_SIZE, shuffle = False)

[Step5] Set Network Structure

    # Model
    cfg = {
        'VGG11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        'VGG13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
        'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
    }

    class VGG(nn.Module):
        def __init__(self, vgg_name):
            super(VGG, self).__init__()
            self.features = self._make_layers(cfg[vgg_name])
            self.classifier = nn.Sequential(
                nn.Linear(512 * 1 * 1, 360),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(360, 100),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(100, 10),
            )

        def forward(self, x):
            out = self.features(x)
            # The transform resizes images to 224x224, so the feature map here is
            # 512x7x7. Pool it to 512x1x1 to match the 512-input classifier.
            out = nn.functional.adaptive_avg_pool2d(out, 1)
            out = out.view(out.size(0), -1)
            out = self.classifier(out)
            return out

        def _make_layers(self, cfg):
            layers = []
            in_channels = 3
            for x in cfg:
                if x == 'M':
                    layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
                else:
                    layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),
                               nn.BatchNorm2d(x),
                               nn.ReLU(inplace=True)]
                    in_channels = x
            return nn.Sequential(*layers)

[Step6] Create Model instance

    model = VGG('VGG16').to(DEVICE)
    print(model)

- VGG(
    (features): Sequential(
      (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (2): ReLU(inplace=True)
      (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (5): ReLU(inplace=True)
      (6): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (7): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (8): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (9): ReLU(inplace=True)
      (10): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (11): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (12): ReLU(inplace=True)
      (13): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (14): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (15): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (16): ReLU(inplace=True)
      (17): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (18): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (19): ReLU(inplace=True)
      (20): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (21): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (22): ReLU(inplace=True)
      (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (24): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (25): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (26): ReLU(inplace=True)
      (27): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (28): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (29): ReLU(inplace=True)
      (30): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (31): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (32): ReLU(inplace=True)
      (33): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (34): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (35): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (36): ReLU(inplace=True)
      (37): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (38): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (39): ReLU(inplace=True)
      (40): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (41): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (42): ReLU(inplace=True)
      (43): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )
    (classifier): Sequential(
      (0): Linear(in_features=512, out_features=360, bias=True)
      (1): ReLU(inplace=True)
      (2): Dropout(p=0.5, inplace=False)
      (3): Linear(in_features=360, out_features=100, bias=True)
      (4): ReLU(inplace=True)
      (5): Dropout(p=0.5, inplace=False)
      (6): Linear(in_features=100, out_features=10, bias=True)
    )
  )
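The first `Linear` layer in the printed classifier takes 512 inputs, so the flattened output of `features` has to hold exactly 512 values per image. Whether it does depends on the input resolution. A rough sketch of the arithmetic, where `flattened_features` is a hypothetical helper written for this illustration:

```python
VGG16_CFG = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
             512, 512, 512, 'M', 512, 512, 512, 'M']


def flattened_features(layers, image_size):
    """Return channels * height * width after the conv/pool stack.

    A 3x3 conv with padding=1 keeps height and width unchanged;
    a 2x2 max-pool with stride 2 halves them (rounding down).
    """
    size = image_size
    channels = 3
    for x in layers:
        if x == 'M':
            size //= 2
        else:
            channels = x
    return channels * size * size


print(flattened_features(VGG16_CFG, 32))   # 512
print(flattened_features(VGG16_CFG, 224))  # 25088
```

A 32x32 CIFAR image shrinks to 1x1 after the five pooling layers (512 values), while a 224x224 image shrinks to 7x7 (25088 values). A model with a 512-input classifier therefore needs the feature map reduced to 1x1 before flattening when the inputs are resized to 224x224.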

[Step7] Model compile

    loss = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr = LEARNING_RATE, momentum=0.9)

[Step8] Set train loop

    def train(train_loader, model, loss_fn, optimizer):
        model.train()

        size = len(train_loader.dataset)

        for batch, (X, y) in enumerate(train_loader):
            X, y = X.to(DEVICE), y.to(DEVICE)
            pred = model(X)

            # Compute the loss
            loss = loss_fn(pred, y)

            # Backpropagation
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if batch % 100 == 0:
                loss, current = loss.item(), batch * len(X)
                print(f'loss: {loss:>7f} [{current:>5d}]/{size:5d}')

[Step9] Set test loop

    def test(test_loader, model, loss_fn):
        model.eval()

        size = len(test_loader.dataset)
        num_batches = len(test_loader)
        test_loss, correct = 0, 0

        with torch.no_grad():
            for X, y in test_loader:
                X, y = X.to(DEVICE), y.to(DEVICE)
                pred = model(X)
                test_loss += loss_fn(pred, y).item()
                correct += (pred.argmax(1) == y).type(torch.float).sum().item()

        test_loss /= num_batches
        correct /= size
        print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:8f}\n")

[Step10] Run model

    for i in range(EPOCH):
        print(f"Epoch {i+1} \n------------------------")
        train(train_loader, model, loss, optimizer)
        test(test_loader, model, loss)
    print("Done!")

CIFAR Classifier (Pretrained VGGNet)

- Fine-tune a VGGNet trained on ImageNet data so that it can be used on the given dataset.

    from torchvision import models

    vgg16 = models.vgg16(pretrained=True)
    vgg16.to(DEVICE)
    print(vgg16)

- /usr/local/lib/python3.10/dist-packages/torchvision/models/_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and may be removed in the future, please use 'weights' instead.
    warnings.warn(
  /usr/local/lib/python3.10/dist-packages/torchvision/models/_utils.py:223: UserWarning: Arguments other than a weight enum or `None` for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing `weights=VGG16_Weights.IMAGENET1K_V1`. You can also use `weights=VGG16_Weights.DEFAULT` to get the most up-to-date weights.
    warnings.warn(msg)
  Downloading: "https://download.pytorch.org/models/vgg16-397923af.pth" to /root/.cache/torch/hub/checkpoints/vgg16-397923af.pth
  100%|██████████| 528M/528M [00:06<00:00, 87.1MB/s]
  VGG(
    (features): Sequential(
      (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (1): ReLU(inplace=True)
      (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (3): ReLU(inplace=True)
      (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (6): ReLU(inplace=True)
      (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (8): ReLU(inplace=True)
      (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (11): ReLU(inplace=True)
      (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (13): ReLU(inplace=True)
      (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (15): ReLU(inplace=True)
      (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (18): ReLU(inplace=True)
      (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (20): ReLU(inplace=True)
      (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (22): ReLU(inplace=True)
      (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (25): ReLU(inplace=True)
      (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (27): ReLU(inplace=True)
      (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (29): ReLU(inplace=True)
      (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )
    (avgpool): AdaptiveAvgPool2d(output_size=(7, 7))
    (classifier): Sequential(
      (0): Linear(in_features=25088, out_features=4096, bias=True)
      (1): ReLU(inplace=True)
      (2): Dropout(p=0.5, inplace=False)
      (3): Linear(in_features=4096, out_features=4096, bias=True)
      (4): ReLU(inplace=True)
      (5): Dropout(p=0.5, inplace=False)
      (6): Linear(in_features=4096, out_features=1000, bias=True)
    )
  )
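The `in_features=25088` of the first classifier layer is 512 x 7 x 7, the size of the `avgpool` output. A short sketch of how many parameters the three printed `Linear` layers hold, counting one weight per input-output pair plus one bias per output; `linear_params` and `classifier_params` are hypothetical helpers for this illustration:

```python
def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features


def classifier_params(num_classes):
    """Parameter count of the three Linear layers in the printed classifier."""
    return (linear_params(512 * 7 * 7, 4096)
            + linear_params(4096, 4096)
            + linear_params(4096, num_classes))


print(classifier_params(1000))  # 123642856
print(classifier_params(10))    # 119586826
```

These are the parameters an optimizer built from `vgg16.classifier.parameters()` updates; the first figure is for the 1000-class head as printed, the second for a 10-class head.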

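    # Replace the final layer with a new 10-class head. Assigning to
    # `out_features` alone would leave the 1000-output weight matrix unchanged.
    vgg16.classifier[6] = nn.Linear(4096, 10).to(DEVICE)

    # Freeze the convolutional feature extractor
    for param in vgg16.features.parameters():
        param.requires_grad = False

    loss = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(vgg16.classifier.parameters(), lr = LEARNING_RATE, momentum=0.9)

    for i in range(EPOCH):
        print(f"Epoch {i+1} \n------------------------")
        train(train_loader, vgg16, loss, optimizer)
        test(test_loader, vgg16, loss)
    print("Done!")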
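Note that assigning to `out_features` on an existing `nn.Linear` only updates the stored attribute; the weight matrix keeps its original shape, so the layer still emits the original number of outputs. A minimal sketch with a toy layer (the sizes 8, 5, and 2 are made up for illustration) contrasts that with swapping in a new layer:

```python
import torch
from torch import nn

x = torch.zeros(1, 8)

# Changing the attribute does not resize the weight matrix.
layer = nn.Linear(8, 5)
layer.out_features = 2
print(layer.weight.shape)  # torch.Size([5, 8])
print(layer(x).shape)      # torch.Size([1, 5])

# Replacing the layer creates a new, randomly initialized weight matrix.
new_layer = nn.Linear(layer.in_features, 2)
print(new_layer(x).shape)  # torch.Size([1, 2])
```

The same reasoning applies to `vgg16.classifier[6]`: a head that really produces 10 logits has to be a new `nn.Linear(4096, 10)`, which then starts from random weights and is trained during fine-tuning.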
